I wrote this post for colleagues who mistakenly believed that if you looked under the hood of ChatGPT, you would find every article in Wikipedia and lots of books and articles from the internet stored inside. This is not so. GPT-3, the model family ChatGPT grew out of, has 175 billion parameters, which comes to a few hundred gigabytes on disk. This is a metaphor for how it works.

Posted on LinkedIn on 1/5/24

A Poet’s Guide to ChatGPT: No, AI is not Becoming Sentient, and the Proof is that the File Size is Small

If you’re concerned because Bing Chat is behaving like a troll, don’t worry. There is no little angry spirit inside a server, thinking and feeling. A more accurate comparison would be a parrot that can mimic English words without understanding their meaning. Similarly, when a language model generates text, it doesn’t know whether it’s discussing a banana or an elephant; it simply tracks the statistical likelihood of certain words following one another. The next word may be predicted with a 99% probability, but the algorithm doesn’t care what it means.
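To make the parrot idea concrete, here is a minimal sketch of next-word prediction by counting. It is a toy, not how ChatGPT is actually built: real models use neural networks rather than lookup tables, and the function names and the tiny training text below are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def next_word_probabilities(following, word):
    """Turn the raw follow-up counts for one word into probabilities."""
    counts = following[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

# Hypothetical training text: three short sentences.
corpus = (
    "the parrot eats a banana . "
    "the elephant eats a banana . "
    "the parrot eats a cracker ."
)
model = train_bigrams(corpus)

# The "model" has no idea what a banana is. It only knows that
# "banana" followed "a" two times out of three.
print(next_word_probabilities(model, "a"))
print(next_word_probabilities(model, "eats"))
```

After "eats", the only word ever seen is "a", so it is predicted with 100% probability. That certainty comes from counting, not from understanding.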

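Furthermore, the ChatGPT model is small given how much it seems to know. GPT-3, the model family it grew out of, has 175 billion parameters, which works out to roughly 175 gigabytes at one byte per parameter and a few hundred gigabytes at the precisions models are more commonly stored in. Either way, it’s not holding billions of documents inside.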
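Here is a quick back-of-the-envelope check on the size. GPT-3, the published model most closely tied to the first ChatGPT, has 175 billion parameters (the numbers the model learned). How many gigabytes that takes depends on how many bytes store each number. The storage formats below are assumptions for illustration, since the exact format and size of the models behind ChatGPT have not been published.

```python
# GPT-3's published parameter count.
PARAMETERS = 175_000_000_000

# Assumed storage formats (illustrative): bytes used per parameter.
BYTES_PER_PARAMETER = {
    "32-bit float": 4,
    "16-bit float": 2,
    "8-bit integer": 1,
}

def model_size_gb(parameters, bytes_per_parameter):
    """Size in gigabytes (1 GB = 1e9 bytes) of a model's parameters."""
    return parameters * bytes_per_parameter / 1e9

for name, nbytes in BYTES_PER_PARAMETER.items():
    print(f"{name}: {model_size_gb(PARAMETERS, nbytes):.0f} GB")
```

This gives 700 GB, 350 GB, and 175 GB respectively: a file that fits on an ordinary hard drive.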

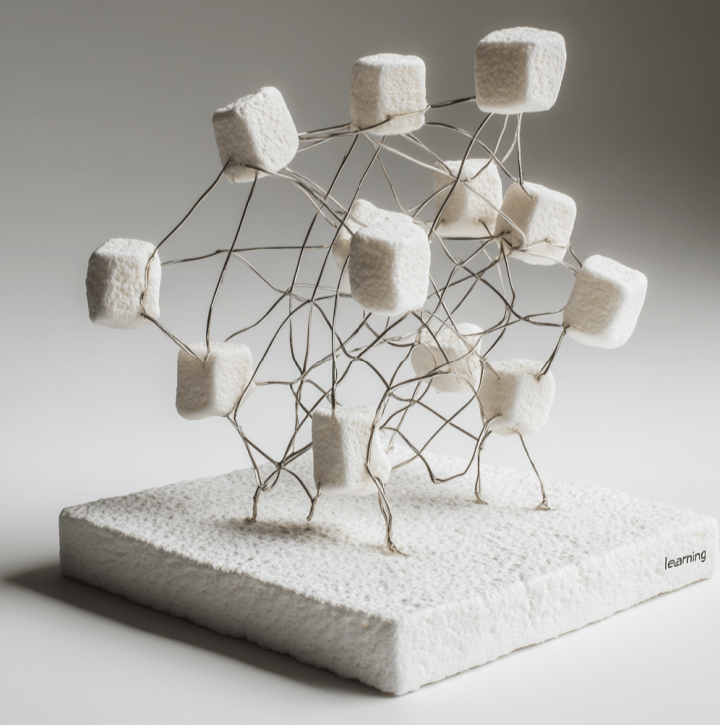

Here is the Marshmallow Metaphor for How it Works

Imagine that a marshmallow represents data: say all the information in one book is one marshmallow. You’ve got 50 marshmallows. Machine learning is a long, thin aluminum wire. We string all the marshmallows onto it, then bend and wrap the wire into an intricate lattice that holds them all together. The bends in the wire represent the computer “learning” whatever lessons the marshmallows have to teach it. That sculpture of wire armature and marshmallows is the machine learning model after it has ingested all the data and looked for patterns in the language.

Now, imagine putting the sculpture in a fire and burning away all the marshmallows, so all that’s left is the armature and some marshmallow residue. That empty, sticky armature is the trained model. It’s ChatGPT. You don’t even need the original training data anymore; you just need the wire armature. The wire is evidence of what the model learned from the data, but it is not the data itself.

In essence, training a machine learning model is like reading a book and retaining only the lesson it taught you. You don’t need to memorize every word of the book to gain knowledge from it. Similarly, the ChatGPT program has learned from billions of documents, but it doesn’t need to store them all to be effective.
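The marshmallow metaphor can be acted out in a few lines of Python. This is a sketch under heavy simplification: the "model" here is a straight line with two numbers, where a real language model has billions, and the names `fit_line`, `predict`, `marshmallows`, and `wire` are invented for this example.

```python
def fit_line(points):
    """Least-squares fit of y = slope * x + intercept to (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in points)
    variance = sum((x - mean_x) ** 2 for x, _ in points)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(wire, x):
    """Use only the learned numbers, never the original data."""
    slope, intercept = wire
    return slope * x + intercept

# The marshmallows: made-up training data that follows y = 2x + 1.
marshmallows = [(1, 3), (2, 5), (3, 7), (4, 9)]

# Bend the wire: training squeezes the data into two numbers.
wire = fit_line(marshmallows)

# Burn the marshmallows: the training data is gone.
del marshmallows

# The wire still answers questions about points it never saw.
print(wire)
print(predict(wire, 10))
```

Once the data is deleted, the two numbers in `wire` are all that remain, and they are enough to make a prediction for x = 10.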

Credit: Joe Born and the book “You Look Like a Thing and I Love You.”
